In our earlier whitepaper, we explored how AI adoption is reshaping customer contact – an area in which great risk and reward intersect. Six months on, the case for agentic AI has grown stronger, particularly in sensitive customer interactions where honesty and trust are essential.

Drawing on academic research and industry data, we now understand that AI can do more than just automate processes. It can unlock “more honest” conversations, especially in situations where shame or fear of being judged might inhibit disclosure.

This isn’t just a theory! Research from Stanford, MIT CSAIL, and NUS Business School reveals a striking trend: people are more open with AI than with human agents in contexts like mental health, financial distress, addiction, and relationship issues.

Why?

Because AI doesn’t judge.

The Stanford research frames this in terms of social desirability bias: people moderate what they say according to how they expect others to perceive them. Remove the perceived audience, and honesty increases.

The ‘confession booth effect’, a term coined by NUS, points the same way. In anonymised AI conversations, people admitted to behaviours they hid from humans, like not reading terms and conditions or sharing passwords. In an insurance use case, initial disclosure accuracy rose by 40% when AI agents led the conversation.

MIT CSAIL found that people expend less mental energy managing impressions when talking to AI. This frees cognitive bandwidth for self-reflection and better problem-solving.

Building on these findings, judgment-free AI agents can be deployed in high-trust, high-friction settings such as mental health screening, legal triage, financial support, and trust & safety work. In these settings, AI agents scale more easily than human teams and can be more effective at drawing out honest disclosure.

The paradoxical truth is that people often feel more ‘heard’ by AI than by humans, because they don’t feel the need to pretend.

Yet traditional AI platforms struggle with emotional nuance, privacy, and secure escalation. It is essential to overcome these hurdles with strict compliance (GDPR+), contextual accuracy, and human-aligned escalation protocols.

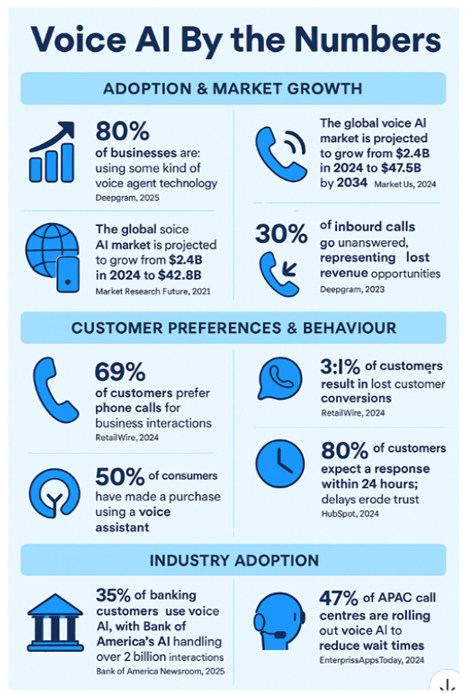

The case for AI grows when combined with market data

Voice has long been forecast to decline as a channel. Yet recent research from the Synthflow white paper brings together a number of key usage statistics which, considered alongside the academic findings, support the view that AI voice is here to stay.

What does all this mean for CX leaders?

- Trust is key. For sensitive topics, customers’ psychological safety must be part of your AI solution.

- Design your AI agent around customer fears, not just FAQs.

- Measure resolution accuracy and emotional sentiment, not just average handle time (AHT).

- Voice AI, when built correctly, can be the most honest channel for customers.

- Integrated agentic AI ensures a consistent experience across platforms.

Customers don’t need AI to sound human. They need AI to “feel safe”. The leading approach to agentic AI will redefine what honest, efficient, and compliant customer interactions can be – especially in a world in which truth drives trust, and trust drives revenue.